Connect Akto with Kubernetes in AWS

Learn how to send API traffic data from your Kubernetes cluster to Akto.

Introduction

Akto needs traffic from your staging, production, or other environments to discover APIs and analyze them for API misconfigurations. It does so by connecting to one of your traffic sources. One such source is your Kubernetes cluster.

Kubernetes is an open-source container orchestration system for automating software deployment, scaling, and management.

You can add the Akto DaemonSet to your Kubernetes cluster. It is very lightweight and runs one pod per node in your cluster. It intercepts all the node's traffic and sends it to the Akto traffic analyzer, which analyzes this traffic to reconstruct your application's API requests and responses, understand API metadata, and find misconfigurations. Akto can handle high traffic volumes, and you can always configure how much traffic is sent to the Akto dashboard.

Overview of Akto-Kubernetes setup

This is how you run Akto's traffic collector on your Kubernetes nodes as a DaemonSet and send mirrored traffic to Akto.

Setting up the Akto DaemonSet pod on your K8s cluster

Set up the Akto data processor using the guide here.

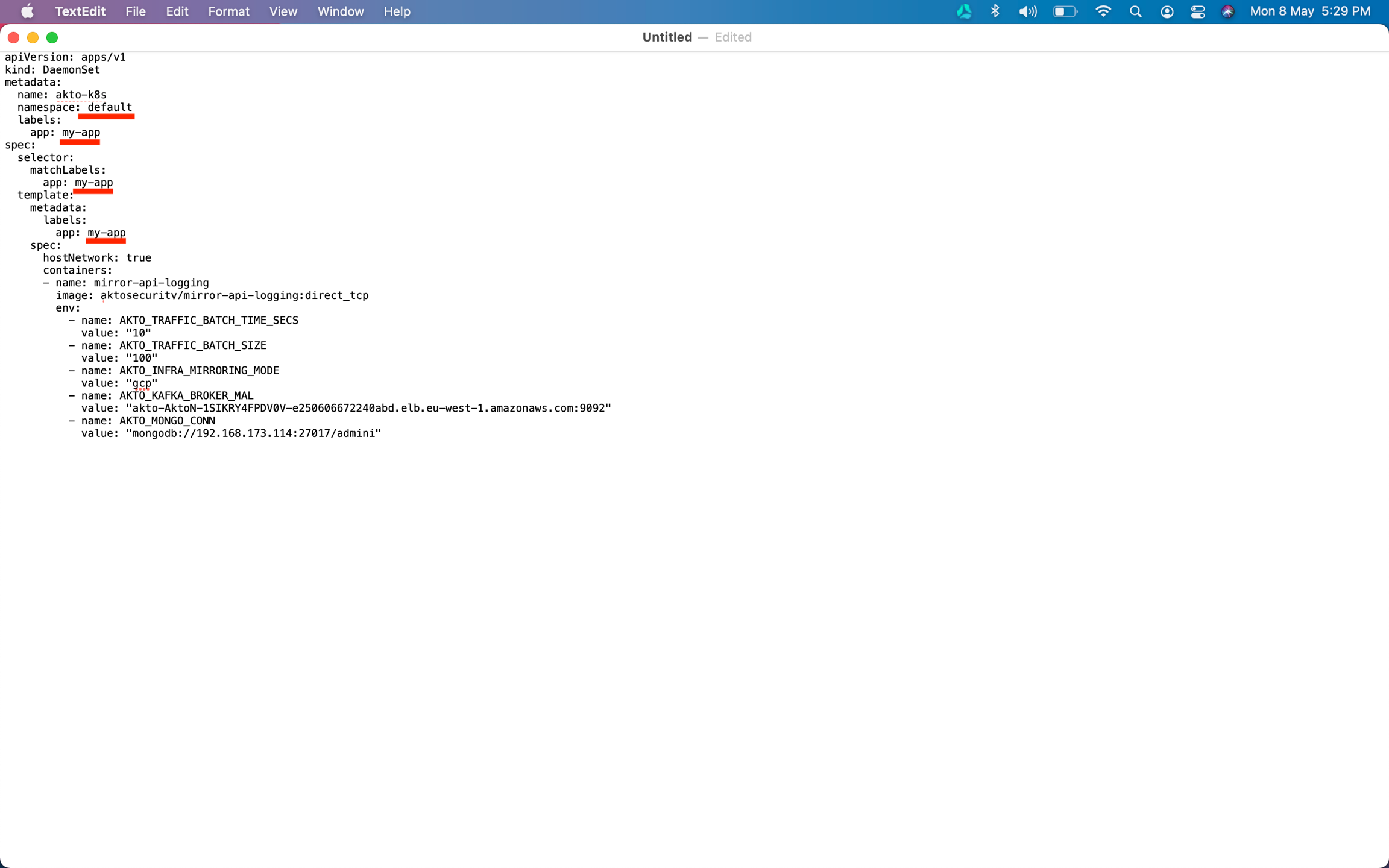

Apply the DaemonSet configuration given below using kubectl apply -f akto-daemonset-config.yaml -n <NAMESPACE>. You will find AKTO_NLB_IP after setting up the Akto data processor, as mentioned above.

Replace {NAMESPACE} with your app namespace and {APP_NAME} with the name of your app.

If you have installed on AWS:

Go to EC2 > Instances > Search for Akto Mongo Instance > Copy its private IP.

Go to EC2 > Load balancers > Search for AktoNLB > Copy its DNS name.

Replace AKTO_NLB_IP with the DNS name, e.g. AktoNLB-ca5f9567a891b910.elb.ap-south-1.amazonaws.com

If you have installed on GCP, Kubernetes or OpenShift:

Get the Mongo service's DNS name from the Akto cluster.

Get the Runtime service's DNS name from the Akto cluster.

Replace AKTO_NLB_IP with the Runtime service's DNS name, e.g. akto-api-security-runtime.p03.svc.cluster.local
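The placeholder substitutions above can also be scripted. The following is a minimal Python sketch, assuming the DaemonSet YAML you saved contains the literal tokens {NAMESPACE}, {APP_NAME} and AKTO_NLB_IP exactly as this guide names them. The function names fill_daemonset_template and fill_file and the sample values are hypothetical; this is not part of Akto's own tooling.

```python
from pathlib import Path

# Placeholders named in this guide; assumed to appear verbatim in the YAML.
PLACEHOLDERS = ("{NAMESPACE}", "{APP_NAME}", "AKTO_NLB_IP")


def fill_daemonset_template(template: str, namespace: str, app_name: str, akto_host: str) -> str:
    """Return the DaemonSet YAML with this guide's placeholders replaced.

    akto_host is the AktoNLB DNS name (AWS) or the Runtime service DNS name
    (GCP, Kubernetes, OpenShift).
    """
    # Fail loudly if a placeholder is missing: that usually means the YAML
    # differs from the one this guide describes.
    missing = [p for p in PLACEHOLDERS if p not in template]
    if missing:
        raise ValueError(f"placeholders not found in template: {missing}")
    values = dict(zip(PLACEHOLDERS, (namespace, app_name, akto_host)))
    for placeholder, value in values.items():
        template = template.replace(placeholder, value)
    return template


def fill_file(path: str, namespace: str, app_name: str, akto_host: str) -> None:
    """Rewrite the YAML file in place (hypothetical helper)."""
    p = Path(path)
    p.write_text(fill_daemonset_template(p.read_text(), namespace, app_name, akto_host))


if __name__ == "__main__":
    sample = "namespace: {NAMESPACE}\napp: {APP_NAME}\nhost: AKTO_NLB_IP\n"
    print(fill_daemonset_template(sample, "prod", "checkout",
                                  "akto-api-security-runtime.p03.svc.cluster.local"))
```

Checking for missing placeholders up front is a deliberate choice: a silent no-op substitution would otherwise only surface later as a DaemonSet that cannot reach Akto.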

Create a file akto-daemonset-config.yaml with the above YAML config.

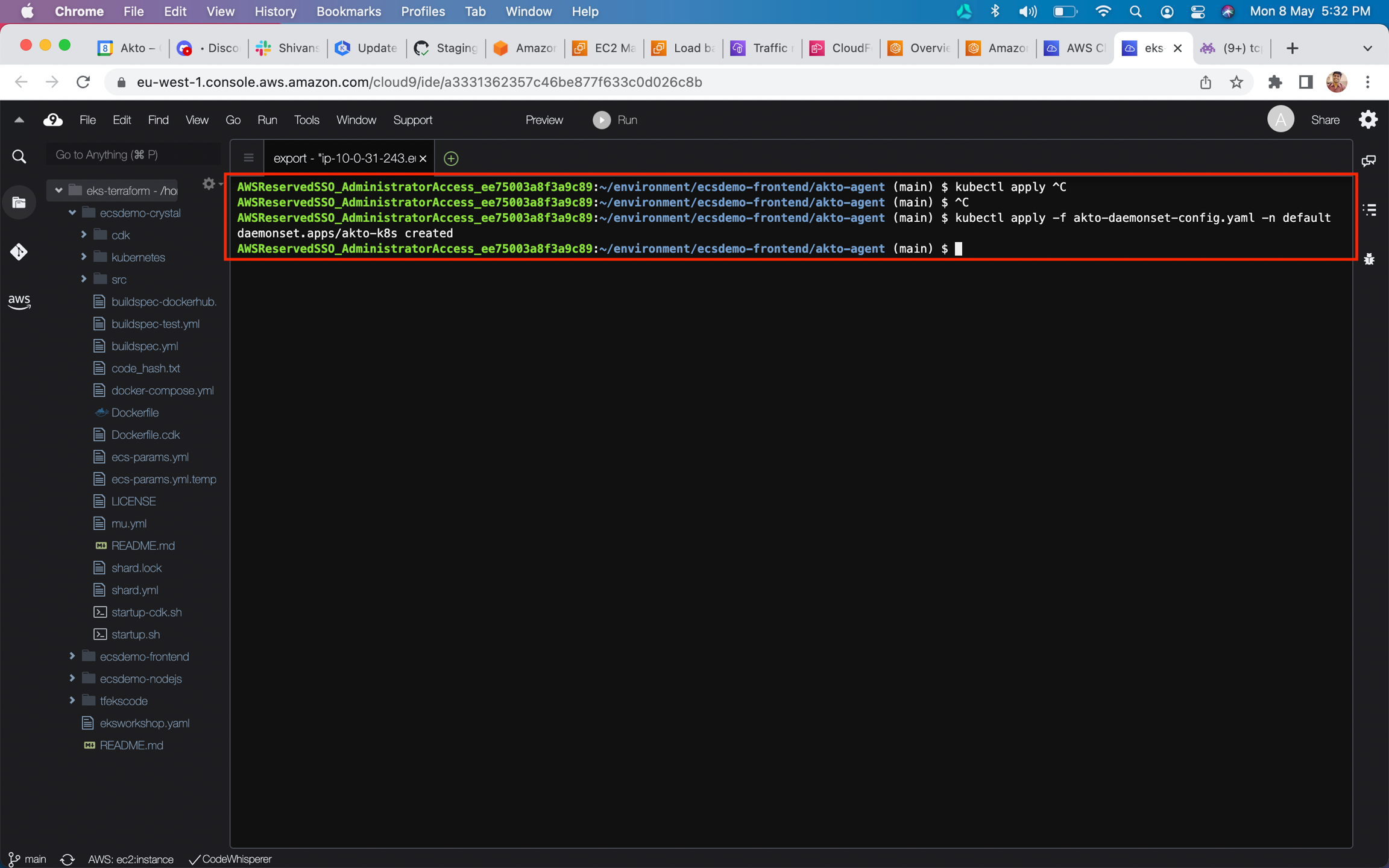

Run kubectl apply -f akto-daemonset-config.yaml -n <NAMESPACE> in your terminal.

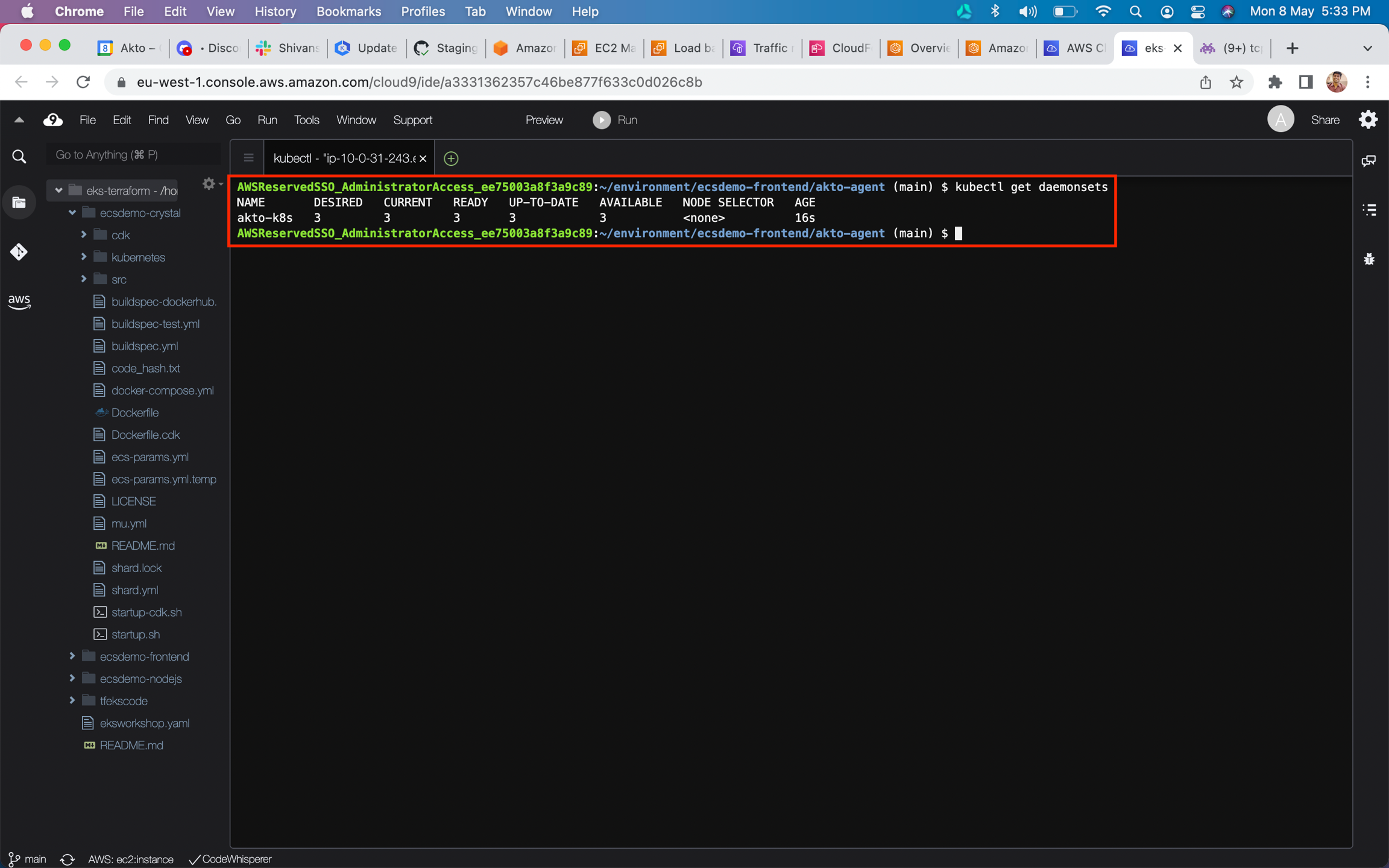

Run the command kubectl get daemonsets -n <NAMESPACE> in your terminal. It should list the akto-k8s DaemonSet.

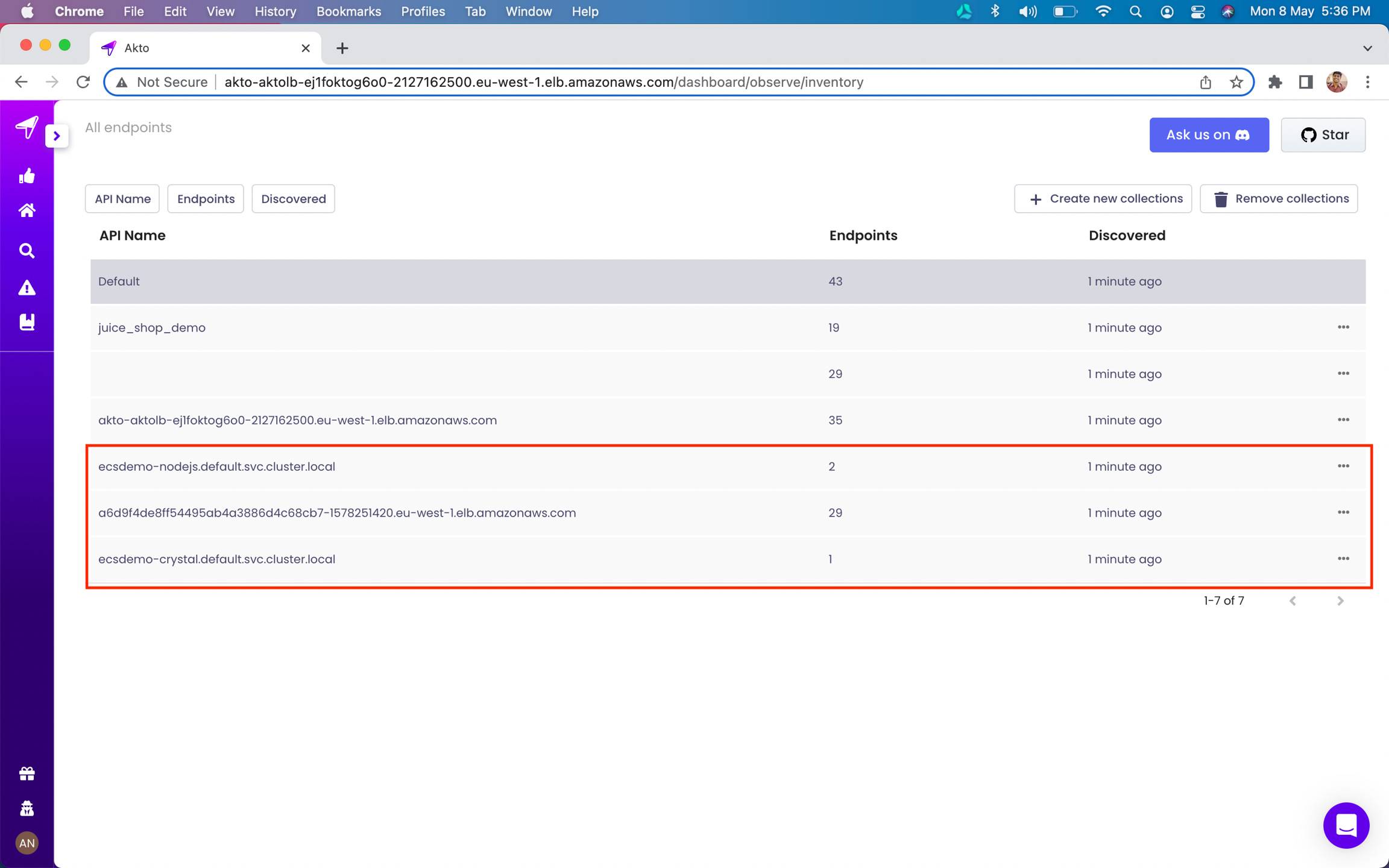
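If you want to check the DaemonSet from a script rather than by eye, the Python sketch below parses the JSON printed by kubectl get daemonsets -n <NAMESPACE> -o json and treats akto-k8s as healthy when every node that should run its pod reports a ready pod. It assumes the DaemonSet name akto-k8s shown above; the helper names are hypothetical.

```python
import json
import subprocess


def fetch_daemonsets_json(namespace: str) -> str:
    """Run kubectl and return its JSON output (requires kubectl on PATH)."""
    result = subprocess.run(
        ["kubectl", "get", "daemonsets", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def akto_daemonset_ready(kubectl_json: str, name: str = "akto-k8s") -> bool:
    """True if the named DaemonSet exists and all its scheduled pods are ready."""
    data = json.loads(kubectl_json)
    for ds in data.get("items", []):
        if ds.get("metadata", {}).get("name") != name:
            continue
        status = ds.get("status", {})
        # desiredNumberScheduled: nodes that should run the pod (one per node);
        # numberReady: nodes whose pod reports the Ready condition.
        desired = status.get("desiredNumberScheduled", 0)
        ready = status.get("numberReady", 0)
        return desired > 0 and ready == desired
    return False  # DaemonSet not found in this namespace
```

Usage: akto_daemonset_ready(fetch_daemonsets_json("<NAMESPACE>")). Keeping the parsing separate from the kubectl call makes the check easy to test without a cluster.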

Go to API Discovery on the Akto dashboard to see your new APIs.

Frequently Asked Questions (FAQs)

The traffic will contain a lot of sensitive data - does it leave my VPC?

Data remains strictly within your VPC. Akto doesn't take data out of your VPC at all.

Does adding DaemonSet have any impact on performance or latency?

There is zero impact on latency. The DaemonSet doesn't sit in the request path like a proxy; it simply captures a copy of the traffic, very similar to tcpdump. It is very lightweight: we have benchmarked it against traffic as high as 20M API requests/min, and it consumes very few resources (CPU and RAM).

Troubleshooting Guide

When I hit apply, it says "Something went wrong". How can I fix it?

Akto runs a CloudFormation template behind the scenes to set up the data processing stack and the traffic mirroring sessions with your application servers' EC2 instances. This error means the CloudFormation setup failed. To find the cause:

Go to AWS > CloudFormation > Search for "mirroring".

Click on the Akto-mirroring stack and go to the Events tab.

Scroll down to the oldest error event.
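The same lookup can be done programmatically. The Python sketch below picks the earliest failed event from a list of CloudFormation stack events. It assumes the event dictionaries carry the keys that boto3's describe_stack_events returns (Timestamp, ResourceStatus, LogicalResourceId, ResourceStatusReason). The stack name in the commented fetch code is an assumption based on the Akto-mirroring stack mentioned above, so confirm the exact name in your console.

```python
from datetime import datetime
from typing import Dict, List, Optional


def oldest_failure(events: List[Dict]) -> Optional[Dict]:
    """Return the earliest event whose status ends in _FAILED, or None."""
    failed = [e for e in events if str(e.get("ResourceStatus", "")).endswith("_FAILED")]
    if not failed:
        return None
    # Events are not guaranteed to be oldest-first, so pick the minimum timestamp.
    return min(failed, key=lambda e: e["Timestamp"])


def describe(event: Dict) -> str:
    """One-line summary: time, resource, status and reason."""
    ts: datetime = event["Timestamp"]
    return (f"{ts:%Y-%m-%d %H:%M:%S} "
            f"{event.get('LogicalResourceId', '?')} {event['ResourceStatus']}: "
            f"{event.get('ResourceStatusReason', '(no reason given)')}")

# Fetching real events (needs boto3 and AWS credentials; not run here):
#   import boto3
#   cfn = boto3.client("cloudformation")
#   pages = cfn.get_paginator("describe_stack_events").paginate(StackName="Akto-mirroring")
#   events = [e for page in pages for e in page["StackEvents"]]
#   first = oldest_failure(events)
#   print(describe(first) if first else "no failed events")
```

The oldest failure is usually the root cause; later failures tend to be rollbacks triggered by it.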

The CloudFormation template failed with "Client.InternalError: Client error on launch.". How should I fix it?

This is a common, known AWS error. Follow the steps here.

The CloudFormation template failed with "We currently do not have sufficient capacity in the Availability Zone you requested... Launching EC2 instance failed."

You can reinstall Akto in a different Availability Zone, or go to the Template tab and save the CloudFormation template to a file. Search for "InstanceType" and replace every occurrence with an instance type that is available in your Availability Zone. Then go to AWS > CloudFormation > Create stack and use this new template to set up traffic mirroring.
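If the template you saved from the Template tab is JSON, the search-and-replace can be done structurally instead of by hand. This Python sketch assumes a JSON template (a YAML template using CloudFormation short-form tags such as !Ref would need a different loader). It only rewrites string values stored under an InstanceType key and leaves intrinsic functions such as {"Ref": ...} untouched, so review any parameter defaults yourself. The function names and the instance types in the test data are hypothetical.

```python
import json
from typing import Any, List, Tuple


def replace_instance_types(node: Any, new_type: str, changed: List[str], path: str = "") -> Any:
    """Return a copy of node with every string-valued "InstanceType" set to new_type."""
    if isinstance(node, dict):
        out = {}
        for key, value in node.items():
            child = f"{path}/{key}"
            if key == "InstanceType" and isinstance(value, str):
                changed.append(f"{child}: {value} -> {new_type}")
                out[key] = new_type
            else:
                # Recurse into nested objects; {"Ref": ...} values and
                # parameter blocks are copied without modification.
                out[key] = replace_instance_types(value, new_type, changed, child)
        return out
    if isinstance(node, list):
        return [replace_instance_types(v, new_type, changed, f"{path}[{i}]")
                for i, v in enumerate(node)]
    return node


def patch_template(template_json: str, new_type: str) -> Tuple[str, List[str]]:
    """Parse a JSON template, swap instance types, return (new JSON, change log)."""
    changed: List[str] = []
    patched = replace_instance_types(json.loads(template_json), new_type, changed)
    return json.dumps(patched, indent=2), changed
```

Printing the change log before creating the new stack lets you confirm that every replacement is intended.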

I am seeing Kafka-related errors in the DaemonSet logs

If you get an error like "unable to reach host" or "unable to push data to kafka", follow these steps:

Grab the IP of the akto-runtime instance by running "kubectl get service -n {NAMESPACE}".

Use helm upgrade to update the value of the kafkaAdvertisedListeners key to LISTENER_DOCKER_EXTERNAL_LOCALHOST://localhost:29092,LISTENER_DOCKER_EXTERNAL_DIFFHOST://{IP_FROM_STEP_1}:9092

Put the same IP against the AKTO_KAFKA_BROKER_MAL key as {IP_FROM_STEP_1}:9092 in the DaemonSet config, and reapply the DaemonSet config.
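Both values above are easy to mistype, so here is a small Python sketch that builds them from the IP found in step 1. It assumes an IPv4 address and a top-level kafkaAdvertisedListeners key in the Helm values; the release and chart names you pass to helm_upgrade_args are placeholders for your own installation. One detail worth knowing: helm's --set flag treats unescaped commas as separators between values, so the comma inside the listener string is escaped with a backslash.

```python
import ipaddress
from typing import Dict, List


def kafka_settings(runtime_ip: str) -> Dict[str, str]:
    """Build the Helm value and the DaemonSet env value from the akto-runtime IP."""
    # IPv4Address raises a ValueError subclass for anything that is not IPv4.
    ip = str(ipaddress.IPv4Address(runtime_ip.strip()))
    return {
        "kafkaAdvertisedListeners": (
            "LISTENER_DOCKER_EXTERNAL_LOCALHOST://localhost:29092,"
            f"LISTENER_DOCKER_EXTERNAL_DIFFHOST://{ip}:9092"
        ),
        "AKTO_KAFKA_BROKER_MAL": f"{ip}:9092",
    }


def helm_upgrade_args(release: str, chart: str, listeners: str) -> List[str]:
    """Argument list for helm upgrade; release, chart and key path are assumptions."""
    escaped = listeners.replace(",", r"\,")  # --set splits on unescaped commas
    return ["helm", "upgrade", release, chart, "--reuse-values",
            "--set", f"kafkaAdvertisedListeners={escaped}"]
```

The argument list can be passed directly to subprocess.run, which avoids a second round of shell quoting; --reuse-values keeps the release's other values unchanged.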

I don't see my error on this list.

Please send us all the details at [email protected] or reach out via Intercom on your Akto dashboard. We will definitely help you out.

Get Support for your Akto setup

There are multiple ways to request support from Akto. We are available 24x7 on the following channels:

In-app Intercom support: message us with your query on Intercom in the Akto dashboard and someone will reply.

Join our Discord channel for community support.

Contact [email protected] for email support.

Contact us here.
